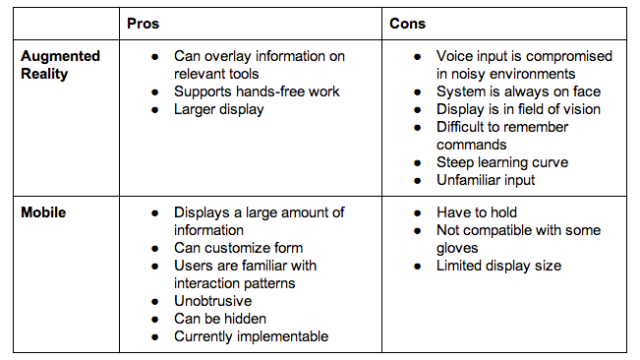

This was the first week in which we focused exclusively on our mobile prototype, having ruled out augmented reality the previous week. We tested a mid-fi prototype with three members of the HCI group: one designer and two developers. Adam and Derin also started development in parallel, using several existing JavaScript frameworks and tools: AngularJS, Node.js, MongoDB, and Ionic. Here’s our rationale for using them:

- AngularJS provides a model-view-controller structure that works in tandem with the Ionic framework, which speeds up development and makes passing data to and from the front end easier.

- MongoDB facilitates integrating a document-based database with our application.

- Node.js lets us run our own server and send data between the back end and the front end.

CHANGES TESTED IN MID-FI PROTOTYPES:

Jobs

- Ability to create templates from previous jobs

- Separated Jobs into three sections: My jobs, Other jobs, Templates
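As a rough illustration of the three-way split, here is a minimal sketch of how jobs could be grouped into those sections. The field names (`owner`, `isTemplate`), the sample records, and the `groupJobs` helper are all hypothetical and are not taken from the prototype.

```javascript
// Hypothetical job records; field names are assumptions for illustration only.
const jobs = [
  { id: 1, title: "Calibrate sensor", owner: "adam", isTemplate: false },
  { id: 2, title: "Assemble housing", owner: "derin", isTemplate: false },
  { id: 3, title: "Calibration template", owner: "adam", isTemplate: true },
];

// Split a flat job list into the three sections shown on the Jobs page.
function groupJobs(allJobs, currentUser) {
  const sections = { myJobs: [], otherJobs: [], templates: [] };
  for (const job of allJobs) {
    if (job.isTemplate) {
      sections.templates.push(job); // templates are listed separately from live jobs
    } else if (job.owner === currentUser) {
      sections.myJobs.push(job);
    } else {
      sections.otherJobs.push(job);
    }
  }
  return sections;
}

const forAdam = groupJobs(jobs, "adam");
console.log(forAdam.myJobs.map((job) => job.title));
```

Checking the template flag first keeps a template out of "My jobs" even when the current user created it, which is one possible reading of the split.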

Notes

- Shortcut for creating notes from a top-level “Add” button in the menu

- Created a “Recently Used” section

Checklists

- Ability to mark an item as complete

Clean room checks

- When users mark a job as complete, the system checks the workspace to see whether they left any tools behind and sends a notification if it detects any
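The check itself could look something like the sketch below. It assumes the system already knows which tools a job used and receives a list of tools detected in the workspace; how that detection works is out of scope here, and the `cleanRoomCheck` name and the notification shape are hypothetical.

```javascript
// Compare the tools used on a job against the tools still detected in the workspace.
// Both inputs are plain arrays of tool names (an assumption for this sketch).
function cleanRoomCheck(jobTools, detectedInWorkspace) {
  const detected = new Set(detectedInWorkspace);
  const leftBehind = jobTools.filter((tool) => detected.has(tool));

  if (leftBehind.length === 0) {
    return null; // nothing left behind, so no notification is needed
  }

  return {
    type: "TOOL_LEFT_BEHIND",
    tools: leftBehind,
    message: `Tools left in workspace: ${leftBehind.join(", ")}`,
  };
}

const notification = cleanRoomCheck(["wrench", "tweezers"], ["tweezers", "cart"]);
console.log(notification ? notification.message : "Workspace is clear");
```

Only tools associated with the completed job are reported, so unrelated items in the workspace (the `cart` above) do not trigger a warning.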

(Screenshot of the revamped Jobs page, which includes My Jobs, All Jobs in Progress, and Job Templates sections)

(Screenshot of the screen users saw after selecting a job from the list)

KEY TAKEAWAYS:

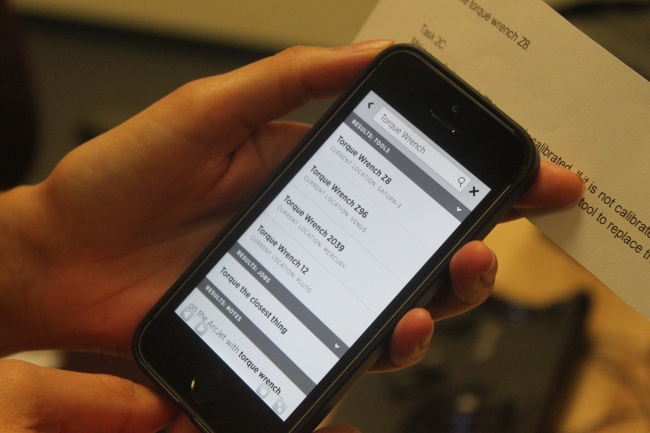

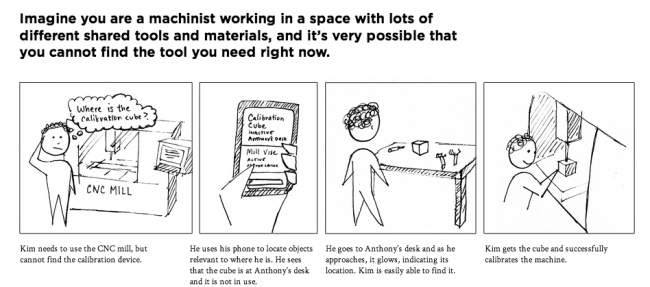

- Clarify that the search function can be used to find tools – one participant said he did not use the search bar to find tools because he thought it only searched high-level menu items (e.g., Jobs and Notes).

- Clarify how the back button works – participants were unsure whether pressing the back button would return them to the screen they were previously on. In the version we tested, pressing the button returned them to the top-level menu item they were previously on (e.g., Jobs, Notes). In the latest version, pressing the button takes them to the previous screen, but only within the current top-level section.

- Clarify how checkboxes work – participants were unsure what marking a job as complete does. Moreover, one participant incorrectly guessed that marking a note as complete would make the associated job complete.

- Make warnings more actionable – instead of simply telling users during a clean room check that they left a tool behind, indicate the tool’s current location and where it belongs.

- Include more feedback and affordances – several examples emerged from our user tests:

  - EX 1: a participant wanted confirmation that marking a job as complete notified participants of his work progress.

  - EX 2: participants wanted confirmation that jobs were automatically saved after they created them.

  - EX 3: participants didn’t realize that they could click a tool to see an expanded set of info about it.
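To make the back-button takeaway concrete, the latest behavior could be modeled as one history stack per top-level section. This is a sketch under our own assumptions, not the prototype's code: the `SectionNavigator` class is hypothetical, and it assumes each section remembers its history when the user switches away and back.

```javascript
// One history stack per top-level section; "back" never leaves the current section.
class SectionNavigator {
  constructor(sections) {
    // Each stack starts with the section's own root screen.
    this.stacks = new Map(sections.map((name) => [name, [name]]));
    this.current = sections[0];
  }

  switchSection(section) {
    if (!this.stacks.has(section)) {
      throw new Error(`Unknown section: ${section}`);
    }
    this.current = section;
  }

  open(screen) {
    this.stacks.get(this.current).push(screen);
  }

  // Pop one screen, but stop at the section root instead of jumping elsewhere.
  back() {
    const stack = this.stacks.get(this.current);
    if (stack.length > 1) {
      stack.pop();
    }
    return this.currentScreen();
  }

  currentScreen() {
    const stack = this.stacks.get(this.current);
    return stack[stack.length - 1];
  }
}

const nav = new SectionNavigator(["Jobs", "Notes"]);
nav.open("Job detail");
nav.open("Tool info");
nav.switchSection("Notes");
nav.open("Note detail");
console.log(nav.back()); // back within Notes only
nav.switchSection("Jobs");
console.log(nav.back()); // back within Jobs only
```

Keeping the stacks separate means pressing back in Notes can never land the user on a Jobs screen, which is the confusion participants reported.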

We’re taking a break from user testing this Friday because of the holidays (and the World Cup quarterfinals...), but we plan to test our first hi-fi prototype with target users next week. Stay tuned!